Disinformation, Democracy, Pandemic, Protest

Irina Raicu is the director of the Internet Ethics program at the Markkula Center for Applied Ethics. Views are her own.

Recently, C-SPAN has been showing the video of a panel discussion in which I participated back in February, co-organized by the Markkula Center for Applied Ethics and KQED Radio. The other panelists were Alex Stamos, director of the Stanford Internet Observatory, and Subramaniam Vincent, the director of our center’s Journalism and Media Ethics program; the moderator was Rachael Myrow, senior editor at KQED. The public event was held at the Computer History Museum in Mountain View, only weeks before such public events were banned in the ongoing effort to control the Covid-19 pandemic in the Bay Area. Our focus, at the time, was on the impact of online disinformation on democracy. You can watch the video here: https://www.c-span.org/video/?469402-1/disinformation-democracy-discussion-computer-history-museum

Two months later, I was a speaker on another panel—this one titled “Science Fact vs. Science Fiction—Misinformation in the Time of Covid 19 (Part 2).” It was organized by the Linda Hall Library in Kansas City, Missouri—a research library focused on science, engineering, and technology. This time, the panel was held via Zoom. The moderator and the panelists each spoke from home; the audience members also participated from home, from various locations with various shelter-in-place restrictions. Technology made this conversation possible, just as it also made possible the vast and rapid spread of both useful health information and the deeply harmful kind of misinformation about the pandemic that we were all reflecting on. You can watch video of that panel here: https://www.lindahall.org/event/science-fact-vs-science-fiction-part-2/

As I am writing this, mass protests are taking place across the U.S. and in other parts of the world, in response to the killing of George Floyd and other African Americans, and to the broader issues of longstanding racism and discrimination. The outrage is fueled in part by videos and reactions posted on social media, bearing witness to what happened to George Floyd and others, as well as to what is happening in the clashes between protesters and police. But much disinformation is now also spreading online about Mr. Floyd’s death itself (compounding the pain felt by his family and friends)—as well as about the protests.

A recent article in Wired magazine, titled “How to Avoid Spreading Misinformation about the Protests,” starts with a frustrated admission. “I cannot make sense of what is happening,” writes Whitney Phillips; “I say this as a scholar of polluted information, someone who talks and thinks about political toxicity more than I do anything else in my life.” After acknowledging her struggles, however, Phillips adds,

> A fundamental fact of the networked environment is that none of us stand outside the information we amplify. Any move we make within a network impacts that network, for better and for worse. An ethically robust response doesn’t mean shutting down for fear of causing any harm to anyone. An ethically robust response means approaching information through the lens of harm, identifying which harms have reached the tipping point, and then doing whatever you can to approach those harms with concern for the lives and well-being of others.

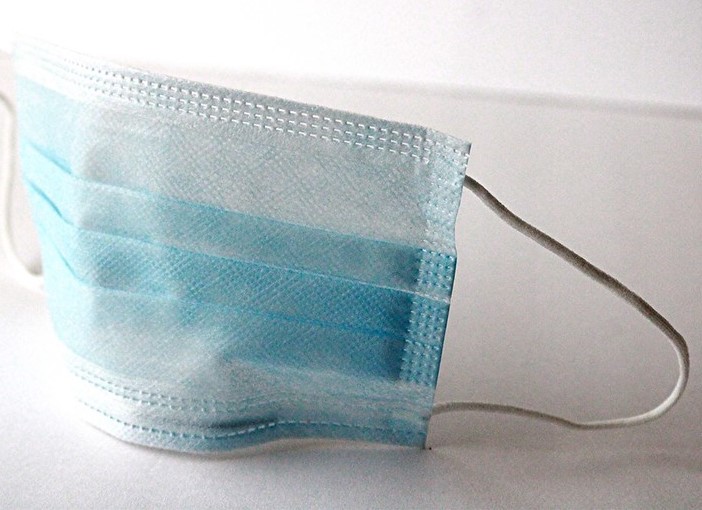

I agree, and I think her suggestions for identifying and attempting to mitigate harms are useful. However, I still think that refraining from sharing some information collected by others is warranted, when we can’t gauge the accuracy of that information. As scholar Kate Starbird has argued, we should do our best not to be “unwitting collaborators” in disinformation efforts. Delaying, at least, the sharing of some stories, until we are confident of their veracity, might be comparable to the wearing of masks in this time of pandemic—one part of a broader effort to keep ourselves from unknowingly spreading harm in our communities.

Photo by 2C2K Photography, cropped, licensed under CC BY-NC-SA 2.0