Subbu Vincent is the director of Journalism & Media Ethics at the Markkula Center for Applied Ethics. He tweets from @subbuvincent and @jmethics.

The Markkula Center for Applied Ethics and the Responsible AI Institute in the School of Engineering at Santa Clara University are happy to announce several new features of the diversity, equity, and inclusion (DEI) Audit Toolkit for News, first demonstrated in 2022. For a brief introduction to the why and what of the source diversity annotation technology for news articles, please see our original announcement.

SCU’s Source Diversity audit technology for news articles consists of a front-end WordPress dashboard plugin supported by a DEI annotation API (application programming interface) service on the back end. The plugin annotates news articles on demand from within WordPress and works for all stories, whether drafts or published.

Acknowledging our collaborators: We could not have built this plugin and reached version 3 without substantial review and testing by our early adopter newsrooms, as well as Newspack. Wisconsin Watch, Seattle Times, and The Ithacan are three early adopter newsrooms that have been beta testing the plugin with us and proposing new features based on real-world use cases and workflows that emerged from their interest in on-demand source diversity audits. The Newspack unit at WordPress has provided a staging site for testing and compatibility for Newspack newsrooms (e.g., Wisconsin Watch).

We are grateful to the following collaborators for their invaluable contributions to the design and development of this plugin.

- Coburn Dukehart, Associate Director, Wisconsin Watch

- Claudiu Lodromanean, Director of Software Engineering, Newspack-WordPress

- Rick Lyons, Software Engineer, Seattle Times

- Anika Varty, Naomi Ishisaka, Matt Canham, Seattle Times newsroom

- Casey Musarra, The Ithacan

What are the new features?

The following are the new features that we added to the WordPress plugin between June and December 2023. They span a range of functionality: the human review system, DEI vocabulary, UX, automations, and access control.

Human Review

1. Correcting the name of a person quoted (speaker) now allows selection from existing sources with the same name.

In a small number of cases, CoreNLP (the Stanford distribution we use for detecting quotes, titles, speakers, and organizations) may get the speaker of a quote wrong or may not find a speaker (attribution) at all. When a journalist manually types in the name through our override feature and that name is already in the newsroom’s sources database, a modal pops up and lets the journalist select the correct source. If this is an altogether new source that the journalist wants to map to a quote, a new name is created in the system when they save the override action.
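The override flow described above can be sketched roughly as follows. This is an illustration only, not the plugin's code: `SourceDirectory`, `resolve_override`, and the case-insensitive name matching are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


def _normalize(name: str) -> str:
    """Collapse whitespace and ignore case so 'maria  lopez' matches 'Maria Lopez'."""
    return " ".join(name.split()).casefold()


@dataclass
class SourceDirectory:
    """Hypothetical in-memory stand-in for a newsroom's sources database."""
    sources: Dict[int, str] = field(default_factory=dict)
    next_id: int = 1

    def find_by_name(self, name: str) -> List[int]:
        """Return the IDs of existing sources whose name matches the typed name."""
        wanted = _normalize(name)
        return [sid for sid, known in self.sources.items() if _normalize(known) == wanted]

    def create(self, name: str) -> int:
        """Store a new source and return its ID."""
        sid = self.next_id
        self.sources[sid] = " ".join(name.split())
        self.next_id += 1
        return sid


def resolve_override(
    directory: SourceDirectory, typed_name: str, chosen_id: Optional[int] = None
) -> Tuple[Optional[int], List[int]]:
    """Map a manually typed speaker name to a source ID.

    Returns (source_id, candidates). A source_id of None means matching sources
    exist and the journalist still has to pick one (the modal step).
    """
    candidates = directory.find_by_name(typed_name)
    if chosen_id is not None:
        if chosen_id not in candidates:
            raise ValueError("chosen source does not match the typed name")
        return chosen_id, candidates
    if candidates:
        return None, candidates  # a UI would show the selection modal here
    return directory.create(typed_name), []  # altogether new source
```

In this sketch, a first call with only the typed name either creates the new source or returns the candidates for the modal; a second call with `chosen_id` records the journalist's selection.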

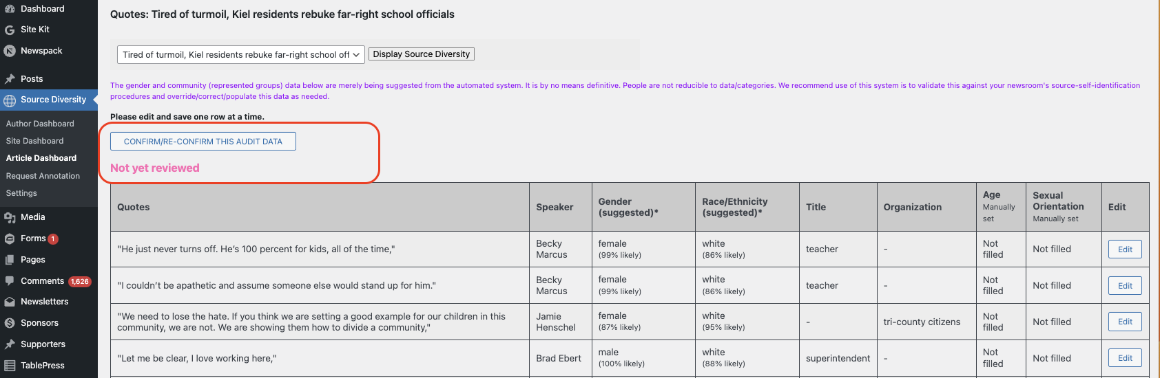

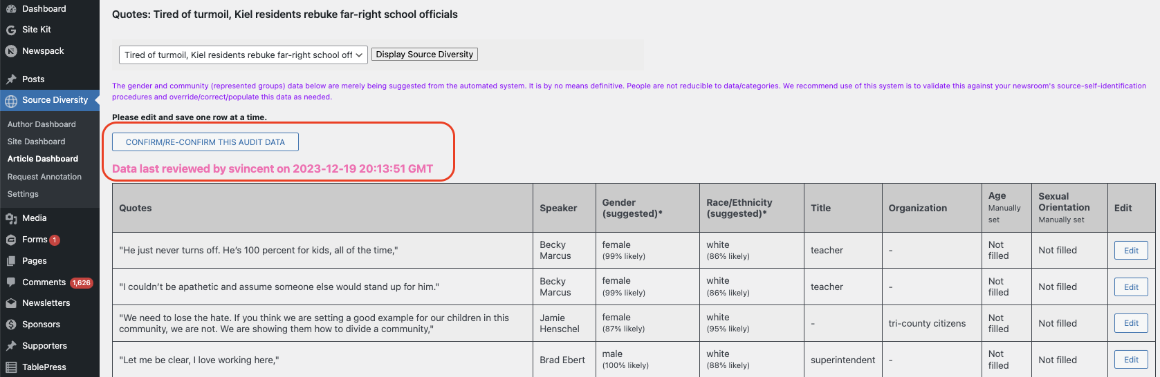

2. Review confirmation.

Newsroom staff can now confirm that they have reviewed the source diversity annotations for an article; the plugin logs the date of confirmation along with the user. This is a signal that all of the API server’s (machine-generated) annotations for quotes and source identity (gender, race/ethnicity, title, etc.) have been manually reviewed. An editor, reporter, or other newsroom staff member can now tell whether an already annotated article has been review-confirmed for its Source Diversity/DEI data. (Credit: Wisconsin Watch, Coburn Dukehart; Seattle Times.)

Before review

After review confirmation

DEI Audit Vocabulary

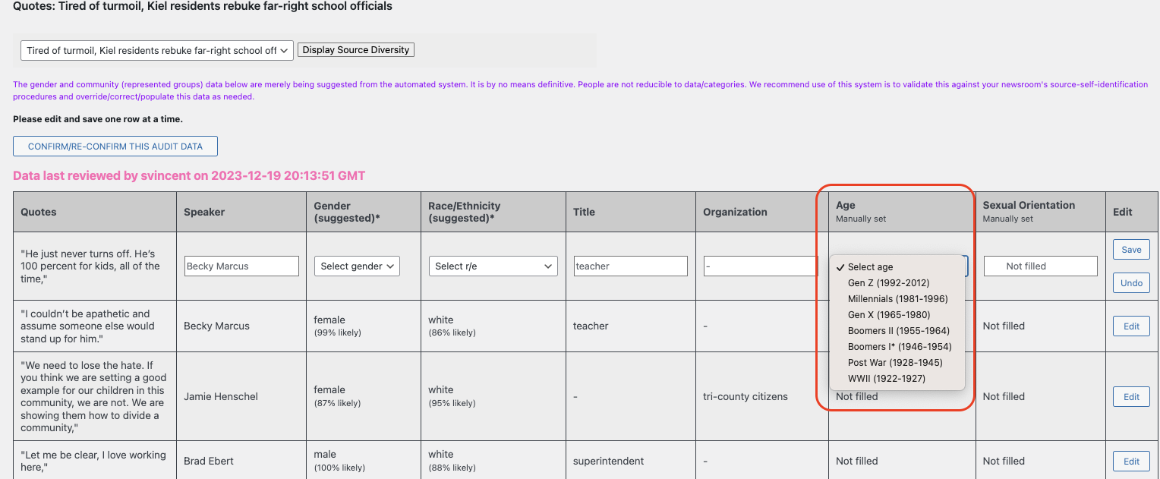

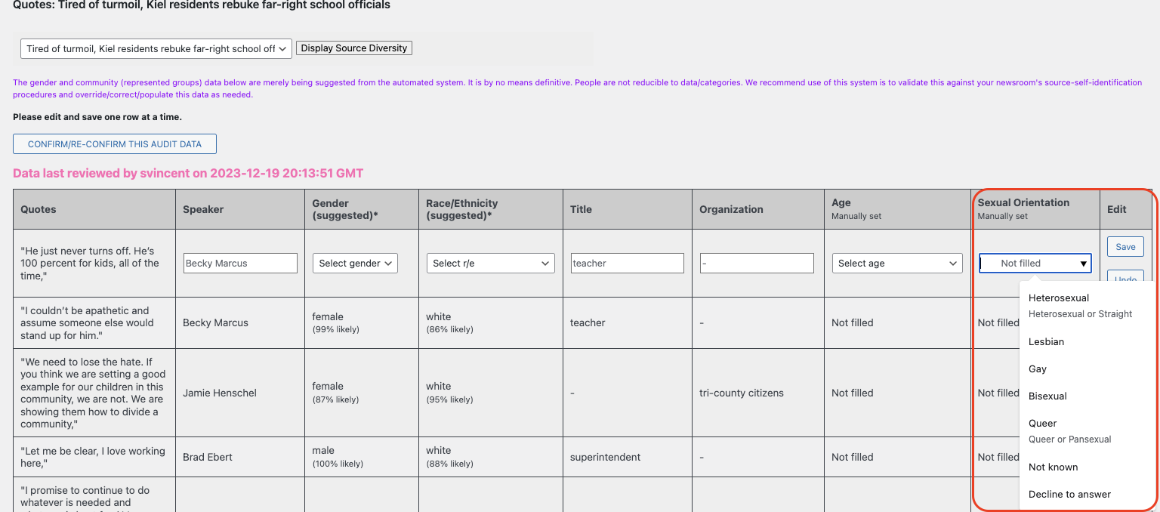

3. Age and Sexual Orientation are now additional source data for manual capture.

At the request of newsrooms, we've added Sexual Orientation and Age as new columns in the Article Dashboard quotes table. Note that this data is not machine-annotated at the API server.

For each source quoted in the story, newsroom staff will be able to manually add the age (range) and sexual orientation into the article dashboard. We recognize that some sources may simply decline to state their sexual orientation, in which case that attribute will just say “Not filled”. (Credit: Seattle Times).

Age

Sexual Orientation

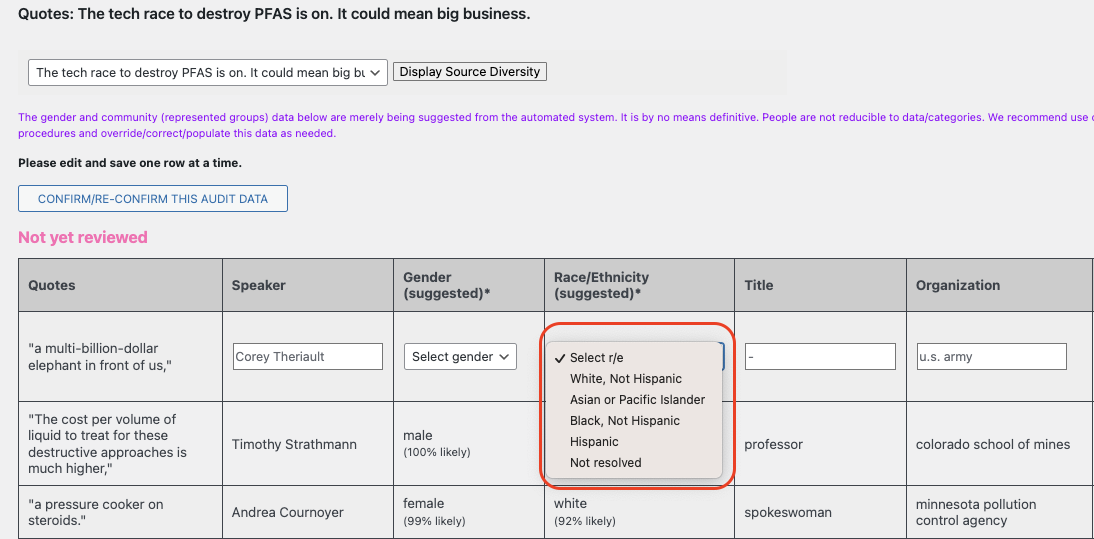

4. Machine-suggested Race/Ethnicity for quoted speakers moves from two categories to four.

In our original release, the race/ethnicity (R/E) audit of quotes counted quoted speakers using a simple two-category system of white vs. non-white sources. The updated system now produces likely R/E suggestions for quoted speakers across four categories: White, Black, Asian, and Hispanic. These suggestions are offered on the Article Dashboard screen, where annotations are reviewed, corrected, and saved manually.

Note: Our Article Dashboard screen always shows this text at the top so that people do not mistake the machine annotations in news audits for the final word.

“The gender and community (represented groups) data below are merely being suggested from the automated system. It is by no means definitive. People are not reducible to data/categories. We recommend use of this system is to validate this against your newsroom's source-self-identification procedures and override/correct/populate this data as needed.”

Training data sources and their use: Our machine learning system has used the following training data sources to suggest likely race/ethnicity and gender of quoted speakers:

- The 2017 Florida (U.S.) voter registration data, which includes information on nearly 13 million voters along with their self-reported race.

- The 2010 U.S. Census, which contains summarized aggregates of counts and characteristics linked to surnames.

- For gender prediction, we look only at first names in the 2017 Florida voter registration dataset, since surnames are usually not correlated with gender.

Automation / Workflow

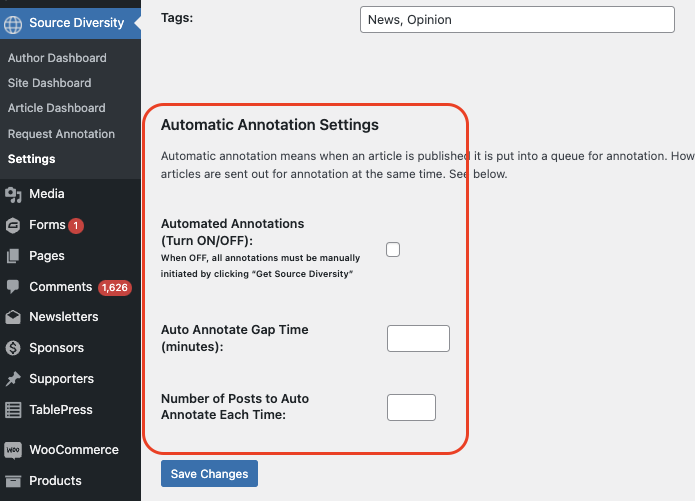

5. The plugin will auto-annotate articles when published, at regular intervals.

This feature is for newsrooms that don’t want to click the Get Source Diversity (GSD) button manually for each article. A newsroom needs to enable this feature in the SDD (Source Diversity Dashboard) plugin's settings. We recommend that newsrooms set the annotation (polling and sending) interval to 15 minutes or longer. The tool sends one article at a time, which is a conservative, safe setting for low-volume publishers. The annotations run in the background. For very long articles, the request to the SCU API server will hit an Apache timeout, but the annotations will still complete. Clicking the manual GSD button then fetches the completed source diversity annotation data. (Credit: Wisconsin Watch, Coburn Dukehart)
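As a rough illustration of the polling behavior, the sketch below picks one published, not-yet-annotated article per run and schedules the next run no sooner than 15 minutes later. The function names, the post dictionary fields, the oldest-first ordering, and the clamping of shorter intervals are assumptions for this example; the plugin itself only recommends the 15-minute minimum.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional

# Recommended minimum from the text; clamping to it is an assumption of this sketch.
MIN_INTERVAL = timedelta(minutes=15)


def next_run(last_run: datetime, interval: timedelta) -> datetime:
    """Return the time of the next polling run, never sooner than MIN_INTERVAL."""
    return last_run + max(interval, MIN_INTERVAL)


def pick_next_article(posts: List[Dict]) -> Optional[Dict]:
    """Pick a single article to send: the oldest published post not yet annotated.

    Each post is a hypothetical dict with 'id', 'status', 'published_at', and
    'annotated' keys. Only one article is returned per run, mirroring the
    one-at-a-time behavior described above.
    """
    pending = [p for p in posts if p["status"] == "publish" and not p["annotated"]]
    if not pending:
        return None
    return min(pending, key=lambda p: p["published_at"])
```

Sending one article per run keeps the load on the annotation server predictable, at the cost of a backlog taking several intervals to clear.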

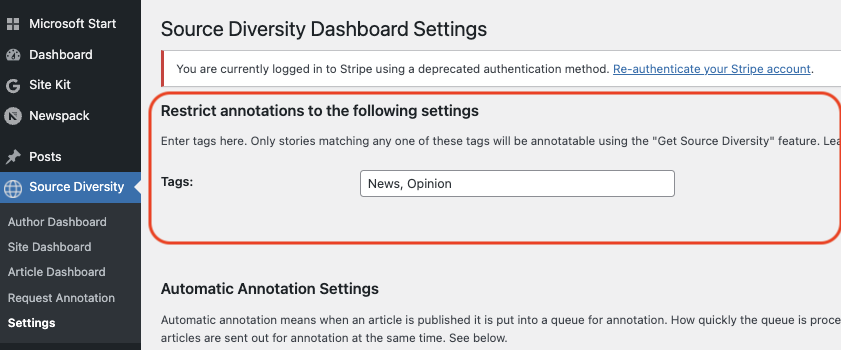

6. Restricting annotations only to articles that match a WordPress tag.

This is now a configuration setting for the plugin. This feature will ensure that the “Get Source Diversity” annotations button will work only for articles that the newsroom decides to include. For example, many news outlets publish syndicated stories from wire services and they do not want to annotate such news articles, since they did not author them. Most newsrooms use WordPress tags that differentiate between the stories they originate and others. (Credit: Wisconsin Watch, Coburn Dukehart)
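A minimal sketch of such a tag check is shown below. The function name, the case-insensitive comparison, and the choice to treat an unset tag as "no restriction" are assumptions for illustration, not the plugin's documented behavior.

```python
from typing import Iterable, Optional


def matches_required_tag(post_tags: Iterable[str], required_tag: Optional[str]) -> bool:
    """Return True if the post may be annotated under the tag restriction.

    If no tag is configured (None or empty), every post is allowed; that
    default is an assumption of this sketch.
    """
    if not required_tag or not required_tag.strip():
        return True
    wanted = required_tag.strip().casefold()
    return any(tag.strip().casefold() == wanted for tag in post_tags)
```

For example, a newsroom that tags its own reporting `original` (a hypothetical tag) would allow a post tagged `["Original", "politics"]` and skip a wire story tagged `["wire", "politics"]`.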

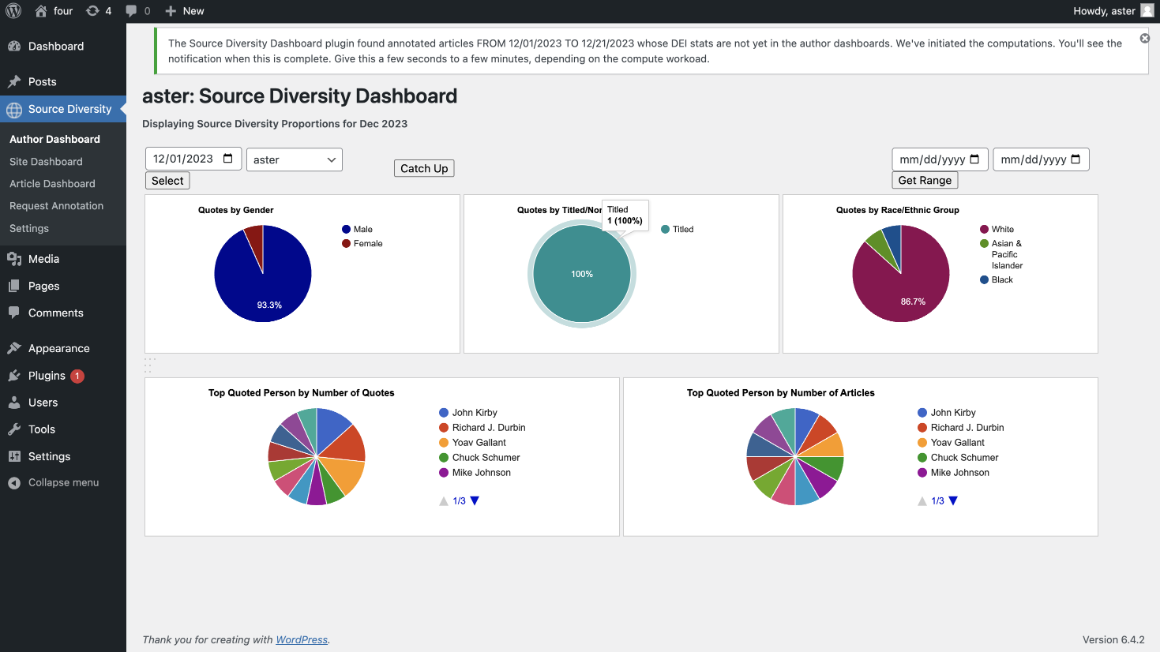

7. Catchup feature to compute aggregate stats.

We’ve added a new stats aggregation capability called “Catchup” for the author and site dashboards. When you click Catchup, it checks the dates of all your annotated articles, computes monthly stats (for the months since the last available stats) up to the current month, and posts them to the site and author dashboards. All the article-level stats (proportions of quotes by gender, race, title, top-quoted persons, etc.) are calculated for the month as a whole. You can do this for both the site-level and the authors’ source diversity pie charts. The main use of this feature is to review month-to-month movements in your source diversity.
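The Catchup idea can be sketched as two steps: list the months that still need stats, then pool the quotes of all articles published in each of those months. The function names and the article dictionary layout are assumptions for this example, and only gender proportions are shown.

```python
from collections import Counter, defaultdict
from datetime import date
from typing import Dict, List, Tuple

Month = Tuple[int, int]  # (year, month)


def months_to_catch_up(last_stats_month: Month, today: date) -> List[Month]:
    """List every month after last_stats_month up to and including today's month."""
    year, month = last_stats_month
    months: List[Month] = []
    while (year, month) < (today.year, today.month):
        month += 1
        if month > 12:
            year, month = year + 1, 1
        months.append((year, month))
    return months


def monthly_gender_shares(articles: List[Dict], months: List[Month]) -> Dict[Month, Dict[str, float]]:
    """Pool quotes across all articles in a month and compute gender proportions.

    Each article is a hypothetical dict with 'published' (a date) and
    'quote_genders' (one label per quote). Months without quotes map to {}.
    """
    counts: Dict[Month, Counter] = defaultdict(Counter)
    wanted = set(months)
    for article in articles:
        key = (article["published"].year, article["published"].month)
        if key in wanted:
            counts[key].update(article["quote_genders"])
    shares: Dict[Month, Dict[str, float]] = {}
    for key in months:
        total = sum(counts[key].values())
        shares[key] = {g: n / total for g, n in counts[key].items()} if total else {}
    return shares
```

Because quotes are pooled before dividing, an article with many quotes weighs more in the monthly proportion than one with few, which matches stats being calculated for the month as a whole.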

Access Control

8. Restricting the plugin to a limited set of WordPress users.

We’ve added a feature where a newsroom can select a set of user IDs that can see the Source Diversity Dashboard plugin and its menus in the WordPress system. Others will not be able to see it or use its capabilities. This allows newsrooms to engage in pilots/trials with a sub-group of journalists before deciding on opening it up to everyone. Currently, this is configured using a .php file in the WordPress plugin’s folder.
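Conceptually, this is an allowlist check on the logged-in user's ID. The sketch below is written in Python for illustration only (the actual configuration lives in a .php file), and the function names, menu labels, and the treatment of an unconfigured allowlist as "everyone allowed" are assumptions.

```python
from typing import Iterable, List, Optional


def can_use_dashboard(user_id: int, allowed_user_ids: Optional[Iterable[int]]) -> bool:
    """Return True if the user may see the plugin.

    None means no allowlist is configured, which this sketch treats as
    'open to everyone'; an empty allowlist hides the plugin from all users.
    """
    if allowed_user_ids is None:
        return True
    return user_id in set(allowed_user_ids)


def visible_menus(user_id: int, allowed_user_ids: Optional[Iterable[int]], menus: List[str]) -> List[str]:
    """Return the plugin menus to render for this user (none if not allowed)."""
    return list(menus) if can_use_dashboard(user_id, allowed_user_ids) else []
```

With a pilot allowlist of `[3, 7]`, user 7 would see the menus and user 9 would see none of them.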

UX Improvements

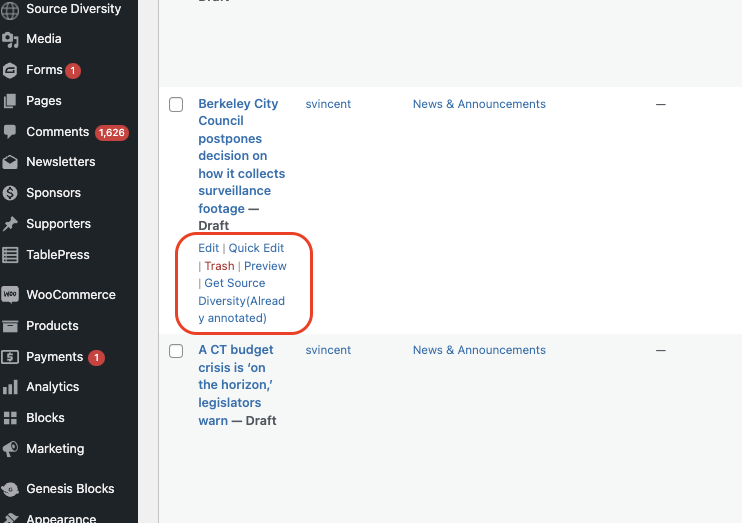

9. GSD on-demand annotations capability is now available on the WordPress Posts page.

(Credit: Seattle Times, Rick Lyons)

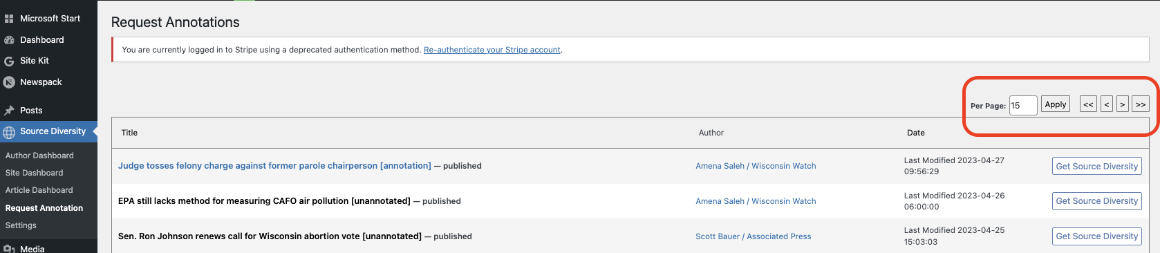

10. The Request Annotation screen for on-demand Source Diversity annotations is now paginated.

(Credit: Seattle Times, Rick Lyons).

Our original release simply listed all annotatable articles in the system in sortable order. We have now implemented an approach similar to the WordPress All Posts UX. This also made database access more efficient, fixing a scaling issue.
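The efficiency gain from pagination typically comes from asking the database for one page of rows instead of the full list. The sketch below shows the general technique with SQLite; the `posts` table, its columns, and the page size of 20 are hypothetical and do not reflect the plugin's actual queries or the WordPress schema.

```python
import math
import sqlite3
from typing import List, Tuple


def fetch_page(conn: sqlite3.Connection, page: int, per_page: int = 20) -> List[Tuple[int, str]]:
    """Fetch only the rows for one page, newest first, using LIMIT/OFFSET."""
    page = max(page, 1)  # treat page numbers below 1 as the first page
    offset = (page - 1) * per_page
    return conn.execute(
        "SELECT id, title FROM posts "
        "ORDER BY published_at DESC, id DESC LIMIT ? OFFSET ?",
        (per_page, offset),
    ).fetchall()


def total_pages(conn: sqlite3.Connection, per_page: int = 20) -> int:
    """Number of pages needed to show every post (at least 1)."""
    count = conn.execute("SELECT COUNT(*) FROM posts").fetchone()[0]
    return max(1, math.ceil(count / per_page))
```

Each screen load then reads at most `per_page` rows plus one count, regardless of how many articles the site holds.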

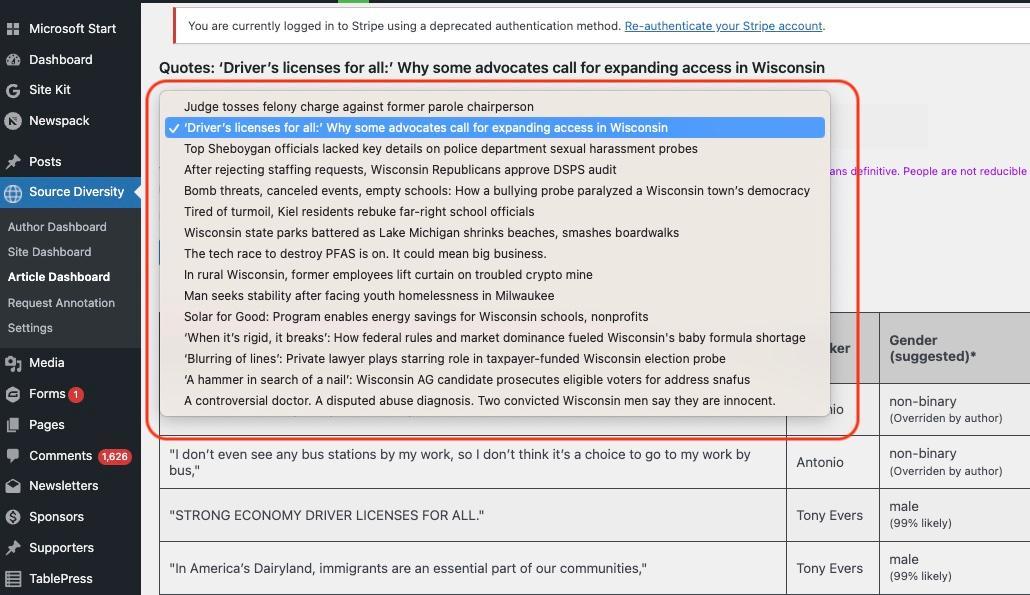

11. The Article Dashboard screen has a dropdown that lists only the 15 most recent annotated articles for the author logged in.

If the logged-in user has admin or edit privileges, it will show the 15 most recent posts annotated at the newsroom as a whole. (Credit: Wisconsin Watch, Coburn Dukehart).
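The dropdown logic can be sketched as a role-dependent filter followed by a sort and a cutoff. The dictionary fields are hypothetical, and mapping "admin or edit privileges" to the WordPress `administrator` and `editor` roles is an assumption of this example.

```python
from typing import Dict, List

# Assumed mapping of "admin or edit privileges" to WordPress role slugs.
NEWSROOM_WIDE_ROLES = {"administrator", "editor"}


def recent_annotated(articles: List[Dict], user: Dict, limit: int = 15) -> List[Dict]:
    """Return the most recently annotated articles this user should see.

    Admins and editors see the newsroom as a whole; everyone else sees only
    their own articles. Each article is a hypothetical dict with 'id',
    'author_id', and a sortable 'annotated_at' value.
    """
    if user["role"] in NEWSROOM_WIDE_ROLES:
        visible = articles
    else:
        visible = [a for a in articles if a["author_id"] == user["id"]]
    return sorted(visible, key=lambda a: a["annotated_at"], reverse=True)[:limit]
```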

Which organizations have tested it and are using it?

- Wisconsin Watch (a Newspack WordPress site) is an advanced test newsroom, a co-designer of features, and a current user of the plugin.

- Seattle Times has tested and customized the plugin and also proposed several features which we have implemented.

- Newspack, WordPress: We acknowledge the help of Newspack’s Claudiu Lodromanean for giving us a fully loaded WordPress Newspack staging site for stress testing features at scale in addition to local testing at SCU.

- The Ithacan (student news site of Ithaca College) is a test newsroom.

What is a typical workflow that this tool fits into?

There are many ways this plugin can be included in newsroom workflows. The answers to the following questions help decide how the tool will be used and how often.

- Who will be responsible for initiating the annotations? Would it be the reporters themselves or a newsroom staff member?

- Who will be responsible for reviewing annotated articles? The reviewer would typically have access to the verified data about the quoted people, override errors with corrections, and save them.

- If a reporter is not going to be doing the human review and correction, how does the newsroom staff member get access to any self-identification that the quoted sources provided in conversation with the reporter?

- Will the newsroom initiate annotations for each published article, or does it prefer the new automated annotations feature? If it's the latter, then the main task is to review all annotated articles periodically and save any corrections.

Examples:

- Wisconsin Watch came up with a custom workflow. They capture the verified source-identity information (ground truth) in a spreadsheet and use that to confirm or override annotation errors in the system.

- A web producer has the responsibility of checking the source data, annotating the articles, and running the human reviews.

How to get this plugin for a WordPress newsroom?

Send an email to Subbu Vincent (svincent@scu.edu) at the Journalism and Media Ethics program, Markkula Center for Applied Ethics, Santa Clara University.

The Markkula-SCU Engineering team

2023 Development and Test Engineers: Xiaoxiao Shang, Jinming Nian, Ethan Lin, Aster Li, Jil Patel (graduated) and Preeti Bhosale (graduated).

Principal Investigators: Yi Fang (Engineering) and Subramaniam Vincent (Journalism and Media Ethics)

Thank you, funders

We thank our funders for their support that helped seed and sustain this project until now: News Quality Initiative, Google News Initiative, Facebook Research, University of Texas at Austin School of Journalism and Media (Professor Anita Varma), and Santa Clara University.

Next steps in 2024

Our immediate plans are:

- Produce a how-to guide for newsrooms on using this plugin.

- Offer video training calls for everyday usage, workflow, and how to measure impact.