Harvey Chilcott '23 was a 2022-23 Hackworth Fellow with the Markkula Center for Applied Ethics, and recently graduated from SCU with a biology major and minors in philosophy and biotechnology. Views are his own.

AI ‘Therapist’: Trust It?

Woebot has the potential to alleviate depression and anxiety, which affect a significant portion of society. However, Woebot also poses harms that are not transparent to its users. Harms experienced by users, especially minors, are overlooked because of the urgency to reduce depression and anxiety. This article exposes the harms Woebot presents and offers a model Woebot could incorporate to improve user outcomes.

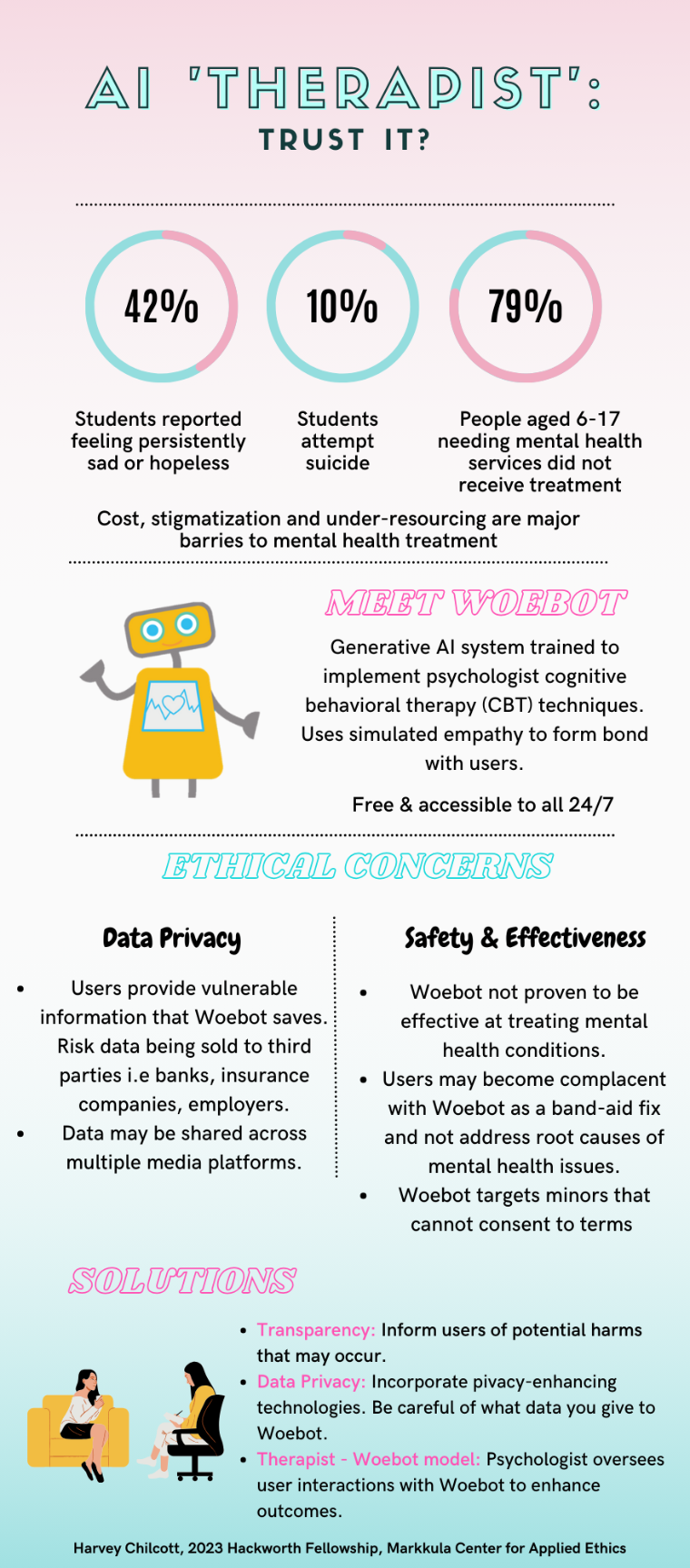

Adolescents are particularly vulnerable to mental illness. According to the CDC, 42% of US high school students reported feeling persistently sad or hopeless. Deteriorating mental health has led 1 in 5 US high school students to seriously consider suicide and 10% to attempt it. Mental health outcomes for adolescents continue to decline. Among US children aged 6-17 who needed mental health services, 79% did not receive treatment. Stigmatization, cost and lack of resources are key factors behind unmet adolescent mental health needs.

This overwhelming need led to the development of Woebot, a generative AI system trained to apply the cognitive behavioral therapy (CBT) techniques used by psychologists. Woebot Health found that 22% of US adults have used a mental health chatbot and that half would consistently use one if their mental health were poor.

Woebot demonstrates potential as a cost-effective and accessible tool to address the dire unmet need for mental health resources. However, AI mental health chatbots pose serious ethical concerns. This article analyzes these concerns by evaluating Woebot against the White House’s recently released AI Bill of Rights, a blueprint for ethical AI. The evaluation proceeds in three steps: 1) stating what the AI Bill of Rights proposes ethical AI looks like, 2) assessing whether Woebot meets these ideals and 3) proposing mitigation strategies. This article focuses on the data privacy criteria.

Maintaining data privacy is vital for consumers because individuals share sensitive information with AI that, if released, could cause embarrassment, stigmatization, discrimination and economic harm. The AI Bill of Rights conveys that ethical AI should a) ensure individuals have agency over how their data is used, b) keep data collection reasonable and brief and c) request consent in understandable terms before collecting consumer data, all to the benefit of users.

Individuals share incredibly sensitive information with Woebot, including mental health conditions, financial difficulties, suicidal tendencies, sexual dysfunctions and presence of disabilities. Woebot saves this sensitive information and claims to protect it in accordance with the Health Insurance Portability and Accountability Act (HIPAA), federal legislation that hospitals follow to protect patient health information. However, HIPAA was enacted in 1996, and the outdated law fails to protect patient privacy from AI, which has developed significantly over the 27 years since.

Once a patient’s data is de-identified by removing identifying information, HIPAA allows the ‘de-identified’ information to be released to third-party companies without the patient’s knowledge or consent. Hospitals in the US currently use this provision to sell de-identified patient electronic health records (EHRs) to Google and Meta. Recently, it was found that a patient’s de-identified EHR can be re-identified with a high degree of accuracy, raising concerns that third parties could gain access to a patient’s EHR. This can lead to discrimination, such as raised insurance premiums or denied promotions. Woebot raises similar concerns.

Woebot may also gather user information and spread it across multiple platforms, including social media. Until recently, Woebot was hosted on Facebook, meaning that user information was subject to Facebook’s privacy terms. This raises concerns about how Facebook used personal data from Woebot to increase its profits, for example by placing targeted advertisements in users’ news feeds. It also raises concerns about Facebook undermining privacy through deceptive disclosures and settings that resulted in private user information being shared with third parties.

These data privacy flaws fail to meet the standards of the AI Bill of Rights because they permit a burdensome invasion of privacy in which data is sold to surveillance companies without user consent or knowledge. Woebot can align with the AI Bill of Rights by implementing several strategies. First, Woebot needs to be more transparent about how user data is handled and warn users of potential risks. Second, granting users agency over their collected information and asking for consent before selling or giving de-identified data to third parties would increase autonomy and mitigate privacy breaches. Lastly, Woebot needs to ensure that de-identified user information cannot be re-identified, for example by using privacy-enhancing technologies (PETs) that create further anonymity and unlinkability.

Woebot image courtesy of Woebot Health.
