‘From the river to the sea’ is a phrase that some utter as an aspiration to freedom and fairness for all but that others hear as a denial of their right to exist, and so it shuts down communication.

Register and Join Us on May 2nd!

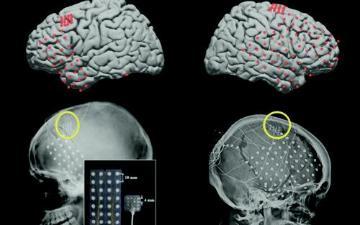

On the multifaceted ethical issues presented by brain implants and AI, and efforts to address them.

Renewing a call for careful social media sharing

On navigating the turbulent flows of deepfakes, data voids, and availability cascades.

On the "solutionless dilemma" of users being misled by chatbots

Too many users don’t realize that chatbots sound certain but shouldn’t; is that a design flaw?

Questions Left Unanswered at Another Hearing Lambasting Social Media CEOs

What if companies were to invest significantly more in content moderation?

With apologies to Mary Oliver’s “Wild Geese”

When social media calls to you, harsh and exciting

Caring for the whole person in the time of chatbots

A snapshot, one year after the public release of ChatGPT

Generating Awareness

We need to be discerning about AI usage and to understand its environmental cost.

A Recent Conversation with Three Experts

Video of our recent panel discussion is now available.

Commentary by Internet Ethics Program Director Irina Raicu and colleagues